As part of our IPv6 deployment we had to upgrade the firmware on our CPEs. We have a small variety of different models, but the majority of them are based on a Broadcom chipset. This firmware upgrade included all the features we needed for IPv6 (the DHCPv6 client for the WAN, RAs on the LAN, etc.), but it also included other fixes and enhancements unrelated to IPv6.

We spend a lot of time and effort regression testing these firmware pushes, and are generally pretty confident in them by the time we mass push them out via TR-069. However, shortly after the firmware upgrade we started hearing complaints that this firmware had broken a very specific use case that we obviously hadn’t tested for: IPv6 tunnels, such as the 6in4 ones offered for free by Hurricane Electric.

Odd. We hadn’t started enabling native IPv6 prefixes for these customers yet, but we did deploy the firmware with ULA RAs enabled. Could that be affecting things? We didn’t think so, but obviously we had to investigate.

Problem Statement:

6in4 tunnel client configured behind the router, inside the DMZ (not firewalled).

6in4 tunnel server on the internet, provided by Hurricane Electric.

Tunnel establishes correctly. Client gets an IPv6 prefix, can ping6 tunnel end-point as well as other v6 connected servers on the internet.

However, TCP sessions don’t establish over the tunnel.

Diagnosing:

ICMPv6 echo requests and replies are passing fine, so it doesn’t appear to be an obvious routing issue.

First step is to bust out netcat and tcpdump on the client, watch the TCP 3-way handshake, and see how far we get.
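For anyone without netcat to hand, here is a minimal Python sketch of the same kind of probe. It is an illustration rather than the tooling we used: the function name `tcp_round_trip` and its parameters are made up for this example, and it assumes the remote service answers whatever payload you send. It waits for data to come back instead of stopping at `connect()`, because `connect()` on the client can return as soon as the SYN/ACK arrives, even if nothing we send afterwards reaches the far end.

```python
import socket


def tcp_round_trip(host, port, payload=b"ping\n", timeout=5.0):
    """Return True if a TCP session to host:port carries data in both directions.

    Hypothetical helper: assumes the remote service replies to `payload`.
    """
    try:
        # create_connection resolves the name and tries IPv6 and IPv4 addresses.
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(payload)
            # An empty read means the peer closed without answering.
            return len(sock.recv(1024)) > 0
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

Pointed at a web server reachable over the tunnel, the payload could be something like `b"HEAD / HTTP/1.0\r\n\r\n"`.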

We see the initial SYN go out fine, and then the reply SYN/ACK from the remote v6 host and we send the replying ACK. All looks good, myth busted, time for the pub….. but wait.. there comes in a duplicate SYN/ACK. Wait what? We send another ACK but yet another duplicate SYN/ACK comes back.
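The pattern in that capture can be spotted mechanically. The sketch below is only an illustration of the reasoning, not something we ran at the time: it assumes the capture has already been reduced to `(direction, flags)` pairs as seen from the client, and `diagnose_handshake` and its return labels are invented for this example.

```python
def diagnose_handshake(packets):
    """Classify a client-side handshake capture.

    `packets` is an ordered list of (direction, flags) pairs, where direction
    is "out" or "in" and flags is a set such as {"SYN", "ACK"}.
    Hypothetical helper; the labels it returns are made up for illustration.
    """
    synack_seen = False
    ack_sent = False
    for direction, flags in packets:
        flags = frozenset(flags)
        if direction == "in" and flags == {"SYN", "ACK"}:
            if ack_sent:
                # The server is retransmitting its SYN/ACK even though we
                # already answered it, so our ACK probably never arrived.
                return "ack-lost"
            synack_seen = True
        elif direction == "out" and flags == {"ACK"} and synack_seen:
            ack_sent = True
    if not synack_seen:
        return "no-synack"
    return "ok" if ack_sent else "no-ack-sent"


capture = [
    ("out", {"SYN"}),
    ("in", {"SYN", "ACK"}),
    ("out", {"ACK"}),
    ("in", {"SYN", "ACK"}),  # duplicate, as seen in our tcpdump
]
print(diagnose_handshake(capture))  # prints "ack-lost"
```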

OK, sounds like our ACKs aren’t making it back to the remote host. Time to check the remote side of the session and confirm.

Sure enough, our hypothesis is correct.

But why? It’s not a routing issue; we confirmed that earlier. Maybe it’s getting eaten inside Hurricane Electric land for some reason. MTU issues seem likely; it’s always a bloody MTU issue, right? Especially when we start faffing with encapsulating tunnels.

Hrm nope this is IPv6, the minimum MTU size is 1280 and that’s definitely enough for a small ACK. Check the he-ipv6 tunnel iface mtu, 1480. Yup, makes sense, 1500-20 bytes for the 6in4 encapsulation. Quick check to make sure we can get at least 1280 through unfragged:

Yup, we’re golden. Can also ping6 up to 1432 bytes unfragged which matches the advertised MSS value above once we include the 8 byte ICMP header (but I didn’t screen-cap that). Side note: There’s also another 40 bytes for the IPv6 header which adds up to the 1480 byte tunnel MTU we confirmed above.

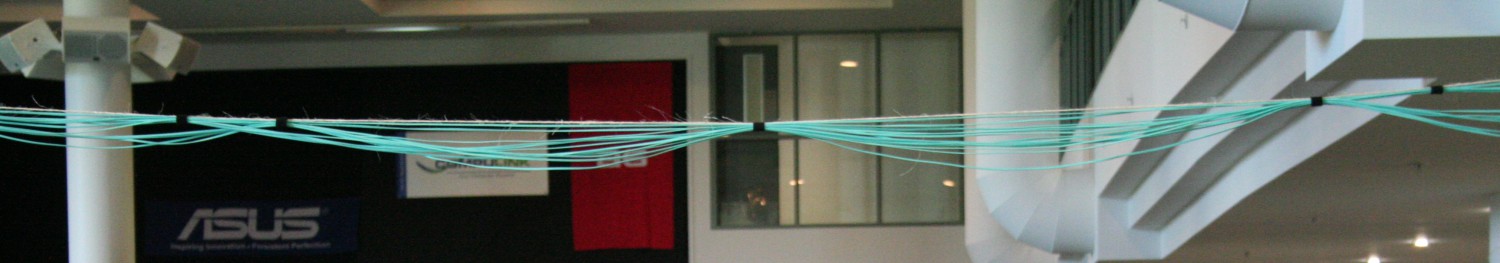
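For reference, the header arithmetic behind those numbers can be written out as a tiny sketch. The 1500 byte link MTU is an assumption about the underlying path, and the function name `sixin4_budget` is made up for this example; the header sizes are the standard fixed ones (no IPv4 options, no IPv6 extension headers).

```python
IPV4_HEADER = 20     # outer IPv4 header added by 6in4, assuming no options
IPV6_HEADER = 40     # fixed IPv6 header, assuming no extension headers
ICMPV6_HEADER = 8    # ICMPv6 echo request header
IPV6_MIN_MTU = 1280  # minimum MTU every IPv6 link must support


def sixin4_budget(link_mtu=1500):
    """Return (tunnel MTU, largest unfragmented ping6 payload) for a 6in4 tunnel."""
    tunnel_mtu = link_mtu - IPV4_HEADER
    max_ping_payload = tunnel_mtu - IPV6_HEADER - ICMPV6_HEADER
    return tunnel_mtu, max_ping_payload


tunnel_mtu, max_ping_payload = sixin4_budget()
print(tunnel_mtu, max_ping_payload)  # prints "1480 1432"
print(tunnel_mtu >= IPV6_MIN_MTU)    # prints "True"
```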

Right, let’s tcpdump on the WAN interface of the router and have a look at the 6in4 encapsulated packets to see what’s up.

Now this is where it gets fun. Tcpdump on the router’s WAN interface…. and the 3-way TCP handshake establishes. Wait….. what? Stop the tcpdump and no new sessions establish successfully.

Riiiiiiiight.. is the kernel looking at the 6in4 payload destination address and throwing it on the ground unless promiscuous mode is enabled? Nope: even with tcpdump running with -p (--no-promiscuous-mode), the TCP sessions still establish. OK, what if we tcpdump on the internal bridge interface instead of the WAN? That results in the broken behaviour!

tcpdump on WAN iface and TCP sessions work. tcpdump on LAN iface and TCP breaks.

This. Is. Odd.

We spent a wee while going back and forth over a few hypotheses and then proving them wrong. Then, during a quick chat, one of our CPE developers tells us about an MTU issue they saw a while ago that affected large packets when using the hardware forwarding function of the Broadcom chipset, known as “fastpath”. But this shouldn’t affect our small 72 byte packet, and besides, it was fixed ages ago.

This gets us thinking: it would explain why tcpdumping could “fix” the issue, by punting the packets to the CPU and forcing software forwarding. Let’s test this theory and disable the hardware fastpath… hrmm, nope, no dice. Let’s disable another fastpath function, this time a software one called “flow cache”. Lo and behold, the TCP sessions establish!

Myth confirmed, bug logged upstream with Broadcom, time for a well earned pint.

Cheers to @NickMurison for raising the issue and helping me with the diagnostics.

BCM63168 is the Broadcom chipset in question.


Hi Richard, not sure if you were the person on #ipv6 IRC the other night. I resolved my problem by buying a $29AUD TP-Link router with a QCA9533 chipset (DD-WRT compatible). Thank you.

The modem experiencing this issue was a Technicolor TG797n V3 issued by Telstra in Australia. It’s definitely Broadcom but I cannot find out the exact chipset. I pulled it apart but there is a heat sink in the way. I think this might be a different chipset (as it does not support VDSL) but still in the BCM63XX range.

What I was seeing in Wireshark was the following: http://imgur.com/b8PSu8W


Hi Louis, thanks for reporting back.

Based on the discussion on IRC, it definitely sounds like the same issue, especially if it’s in the same BCM63XX range. At least the bright side is that it’s a simple $29 box-swap fix, as annoying as it may be.

Broadcom have provided us a patch to test with, but I’m not sure what their plans for it internally are. I assume that they’ll roll it into their main code at some point too.


Hi,

Thanks for clarifying this. I am experiencing a similar issue after my ISP (Telenor Denmark) switched me to the Technicolor TG799vac Xtream router.

Can you tell me how you went about disabling net flow on your router?

You wrote “Myth confirmed, bug logged upstream with Broadcom”. Can you tell me anything about the status at Broadcom: have they fixed this yet, and is there any official bug report available?


By “net flow” I meant “flow cache” of course 🙂


Hi Kristian,

“fap disable” just disables the hardware fastpath, and “fc disable” disables the flow cache (which also disables fastpath)

I’m not sure which version things were fixed in, but it was fixed.


Some devices don’t have the Broadcom supplied fcctl built for them – so I made a tiny (<2kb binary size) program you can copy onto MIPS based Broadcom SoC devices that don't come with fcctl, to disable the Flow Cache: https://gitlab.com/A1kmm/killfc
